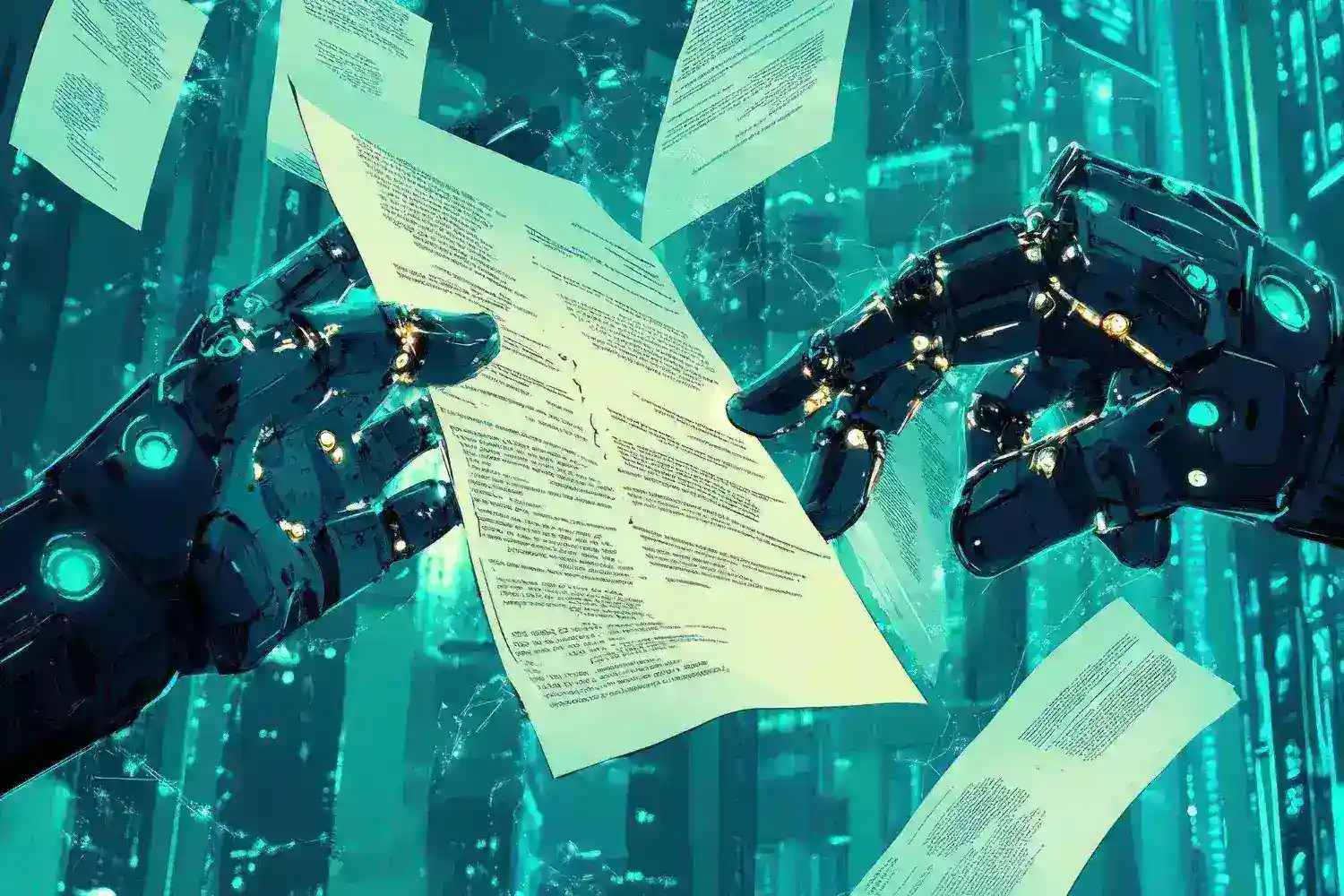

Automating Optical Character Recognition (OCR) over dozens—or even thousands—of scanned pages can save you hours of manual entry. Whether you’re digitizing legal contracts, invoices, or archival records, a batch OCR pipeline transforms a stack of images or PDFs into indexed, searchable text in minutes. By combining open-source tools like Tesseract with simple scripting, robust error handling, and parallel processing techniques, you’ll build a system that handles varied scan quality, recovers gracefully from failures, and scales to whatever volume you need. These lifehacks will guide you through optimizing images, scripting bulk OCR runs, implementing retry logic, and assembling the output into consolidated documents and databases.

Optimize Scans for High-Throughput Accuracy

Before kicking off a bulk OCR job, ensure each document is primed for recognition. Automate image preprocessing with tools like ImageMagick or OpenCV: convert multi-page PDFs to 300 DPI TIFFs, deskew rotated pages using Hough transforms, apply adaptive thresholding to binarize text, and crop unnecessary margins. Write a small script that iterates over your scan directory, tags each file with a “ready” suffix once preprocessed, and skips files below a size threshold—indicating empty or blank pages. By performing these steps in batch, you not only boost Tesseract’s accuracy but also eliminate problematic scans before they reach your OCR engine, reducing the number of retries and manual cleanups later.
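As a rough illustration of the batch step described above, here is a minimal Python sketch that plans and runs preprocessing with ImageMagick. Several things in it are assumptions rather than fixed conventions: the 10 KB blank-page cutoff, the `_ready` suffix, the `magick` binary name (ImageMagick 7; older installs use `convert`), and the specific threshold values. ImageMagick's built-in `-deskew` stands in here for a hand-rolled Hough-transform deskew, and reading PDFs requires Ghostscript to be installed.

```python
import subprocess
from pathlib import Path

MIN_BYTES = 10_000        # assumed cutoff for "probably blank" scans; tune per scanner
READY_SUFFIX = "_ready"   # hypothetical naming convention for preprocessed files
EXTENSIONS = {".pdf", ".png", ".jpg", ".jpeg", ".tif", ".tiff"}


def build_command(src: Path, dst: Path, binary: str = "magick") -> list[str]:
    """Build one ImageMagick call: 300 DPI, grayscale, deskew, binarize, trim."""
    return [
        binary,
        "-density", "300",      # placed before the input so PDFs rasterize at 300 DPI
        str(src),
        "-colorspace", "Gray",
        "-deskew", "40%",       # automatic straightening of rotated pages
        "-lat", "25x25+10%",    # local adaptive threshold (binarization)
        "-trim", "+repage",     # crop uniform margins and reset the canvas
        str(dst),
    ]


def plan_preprocessing(scan_dir: Path, min_bytes: int = MIN_BYTES):
    """Return (todo, skipped): files to convert and files judged blank."""
    todo, skipped = [], []
    for src in sorted(scan_dir.iterdir()):
        if not src.is_file() or src.suffix.lower() not in EXTENSIONS:
            continue
        if src.stem.endswith(READY_SUFFIX):
            continue                      # output of an earlier run, not a raw scan
        if src.stat().st_size < min_bytes:
            skipped.append(src)           # too small to hold real content
            continue
        dst = src.with_name(src.stem + READY_SUFFIX + ".tif")
        if not dst.exists():              # makes reruns idempotent
            todo.append((src, dst))
    return todo, skipped


def preprocess_all(scan_dir: Path) -> list[Path]:
    """Run ImageMagick on every planned file; return the skipped (blank) files."""
    todo, skipped = plan_preprocessing(scan_dir)
    for src, dst in todo:
        subprocess.run(build_command(src, dst), check=True)
    return skipped
```

Calling `preprocess_all(Path("scans"))` would convert everything in a hypothetical `scans` folder and hand back the files it considered blank, so they can be spot-checked before being discarded.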

Script Bulk OCR Runs with Parallel Execution

Tesseract’s command-line interface lends itself to automation. Create a shell or Python wrapper that walks through your “ready” files and invokes Tesseract with parameters suited to your material, such as --oem 1 --psm 3 -l eng+fra for mixed English and French documents, writing plain text and hOCR files side by side. Note that the long options take two ASCII hyphens; a typographic dash pasted from a web page will make Tesseract reject the argument. To use every core, split the workload into chunks and dispatch parallel jobs via GNU Parallel or Python’s multiprocessing module, keeping the number of workers close to the number of CPU cores so you saturate the processor without exhausting memory. Include logging that records each file’s start time, end time, and exit code so you can easily identify failures. This scripting lifehack turns a serial, hours-long process into a scalable conversion that finishes in a fraction of the time.
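One possible shape for such a wrapper is sketched below. It is an illustration under assumptions, not a reference implementation: it uses a thread pool from `concurrent.futures` instead of `multiprocessing` (each job is already a separate Tesseract process, so threads are enough to keep the cores busy), and the function names and the JSON-lines log format are invented for this example.

```python
import json
import os
import subprocess
import time
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def tesseract_command(image: Path, lang: str = "eng+fra") -> list[str]:
    """Command that writes <name>.txt and <name>.hocr next to the input image."""
    out_base = image.with_suffix("")      # Tesseract appends the extensions itself
    return ["tesseract", str(image), str(out_base),
            "--oem", "1", "--psm", "3", "-l", lang, "txt", "hocr"]


def run_one(image: Path, build=tesseract_command) -> dict:
    """Run one OCR job and return a log record with timing and exit code."""
    started = time.time()
    try:
        proc = subprocess.run(build(image), capture_output=True, text=True)
        code, err = proc.returncode, proc.stderr.strip()
    except OSError as exc:                # e.g. the tesseract binary is missing
        code, err = -1, str(exc)
    return {"file": str(image), "start": started, "end": time.time(),
            "exit_code": code, "stderr": err}


def run_batch(images, workers=None, build=tesseract_command) -> list[dict]:
    """OCR all images concurrently; records come back in input order."""
    workers = workers or os.cpu_count() or 1
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda img: run_one(img, build), images))


def write_log(records, log_path: Path) -> None:
    """Append one JSON object per file so failures are easy to filter later."""
    with log_path.open("a", encoding="utf-8") as fh:
        for rec in records:
            fh.write(json.dumps(rec) + "\n")
```

A typical call would be `records = run_batch(sorted(Path("scans").glob("*_ready.tif")))` followed by `write_log(records, Path("ocr_log.jsonl"))`; any record with a nonzero `exit_code` is a candidate for a retry. The `build` parameter exists so the command can be swapped out, for example to try a different page segmentation mode.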

Implement Robust Retry and Error-Handling Logic

Even the best scans and scripts encounter occasional hiccups—corrupted PDFs, unsupported fonts, or out-of-memory errors. Build retry logic into your wrapper: if Tesseract exits with a nonzero code, re-enqueue the file into a “retry” queue, optionally with adjusted parameters (lower resolution or different PSM). Cap retries at a sensible maximum (e.g., two attempts) and move permanently failed documents into a “needs manual review” folder, sending an alert via email or Slack webhook. For long-running batches, implement checkpointing by writing processed filenames to a status file—allowing you to resume from the last successful file after interruptions. These error-handling lifehacks ensure your batch OCR pipeline runs unattended overnight, surfacing only genuinely problematic scans for human intervention.
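The retry queue, attempt cap, review folder, and checkpoint file can be sketched roughly as follows. The details are assumptions made for illustration: `max_attempts=2` is read as two attempts in total, the status file holds one processed path per line, and `attempt` and `notify` are hypothetical callbacks (the first would wrap your Tesseract call and return its exit code, the second would post to email or a Slack webhook).

```python
import shutil
from collections import deque
from pathlib import Path


def load_done(status_file: Path) -> set[str]:
    """Read the checkpoint: paths that already finished successfully."""
    if not status_file.exists():
        return set()
    return set(status_file.read_text(encoding="utf-8").splitlines())


def run_with_retries(files, attempt, status_file: Path, review_dir: Path,
                     max_attempts: int = 2, notify=print) -> list[str]:
    """Process files with bounded retries; return names that failed for good.

    attempt(path, n) must return an exit code (0 = success); n is the
    1-based attempt number, so the callback can change parameters on a retry.
    """
    done = load_done(status_file)
    queue = deque((Path(f), 1) for f in files if str(f) not in done)
    failed = []
    while queue:
        path, n = queue.popleft()
        if attempt(path, n) == 0:
            # Checkpoint right away so an interrupted run can resume here.
            with status_file.open("a", encoding="utf-8") as fh:
                fh.write(f"{path}\n")
        elif n < max_attempts:
            queue.append((path, n + 1))   # re-enqueue behind the remaining work
        else:
            review_dir.mkdir(parents=True, exist_ok=True)
            shutil.move(str(path), str(review_dir / path.name))
            notify(f"OCR failed after {n} attempts: {path.name}")
            failed.append(path.name)
    return failed
```

An `attempt` callback might, for instance, use `--psm 3` on the first try and `--psm 6` on the second. Because successes are written to the status file as they happen, rerunning the same call after a crash skips everything already recorded there.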

Assemble and Integrate OCR Outputs Automatically

Once text is extracted, consolidate and index your results. Use a script to merge individual .txt outputs into per-document files, inserting page-break markers or metadata headers (filename, page number, and timestamp). For searchable archives, import the text into an Elasticsearch index or a SQL database, automating the load via REST API calls or bulk loaders. If you need formatted outputs, generate searchable PDFs by placing the hOCR text layer behind the original page images using tools like hocr-pdf. Finally, clean up intermediate files to reclaim disk space. By automating the final assembly and integration steps, you transform raw OCR text into immediately usable documents and search interfaces, completing an end-to-end batch digitization workflow.
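To make the merge-and-index step concrete, here is a small sketch that uses SQLite from the Python standard library as the SQL database. The page-file naming scheme (`invoice_p001.txt`), the header layout, the form-feed page separator, and the `pages` table are all assumptions chosen for the example; an Elasticsearch bulk load would follow the same grouping logic with a different final step.

```python
import re
import sqlite3
from collections import defaultdict
from datetime import datetime, timezone
from pathlib import Path

# Hypothetical naming scheme: <document>_p<page number>.txt
PAGE_RE = re.compile(r"^(?P<doc>.+)_p(?P<page>\d+)$")


def group_pages(txt_dir: Path) -> dict:
    """Map each document name to its (page number, path) pairs in page order."""
    docs = defaultdict(list)
    for f in txt_dir.glob("*.txt"):
        m = PAGE_RE.match(f.stem)
        if m:
            docs[m["doc"]].append((int(m["page"]), f))
    return {doc: sorted(pages) for doc, pages in docs.items()}


def merge_document(doc: str, pages, out_dir: Path) -> Path:
    """Write one text file per document with a metadata header on every page."""
    stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
    parts = []
    for number, path in pages:
        header = f"=== file: {path.name} | page: {number} | merged: {stamp} ==="
        parts.append(header + "\n" + path.read_text(encoding="utf-8").strip())
    out = out_dir / f"{doc}.txt"
    out.write_text("\n\f\n".join(parts) + "\n", encoding="utf-8")  # \f marks a page break
    return out


def index_pages(db_path: Path, docs: dict) -> int:
    """Load every page into SQLite; rerunning replaces rows instead of duplicating."""
    rows = [(doc, n, p.read_text(encoding="utf-8"))
            for doc, pages in docs.items() for n, p in pages]
    con = sqlite3.connect(db_path)
    try:
        with con:                          # commits on success, rolls back on error
            con.execute("CREATE TABLE IF NOT EXISTS pages "
                        "(doc TEXT, page INTEGER, body TEXT, PRIMARY KEY (doc, page))")
            con.executemany("INSERT OR REPLACE INTO pages VALUES (?, ?, ?)", rows)
    finally:
        con.close()
    return len(rows)
```

After `docs = group_pages(Path("ocr_out"))`, looping over `docs.items()` with `merge_document` produces the consolidated files, and a query such as `SELECT doc, page FROM pages WHERE body LIKE '%invoice%'` gives a basic search over the indexed text.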